The power of a statistical test quantifies how likely we are to decide that the coin is unfair (i.e., to reject the null hypothesis) when it really is unfair. By definition, we can calculate power by running the same experiment a large number of times (e.g., 5000 times) and then calculating the proportion of those experiments in which the null hypothesis can be rejected. However, even a simple task such as coin-tossing becomes very time-consuming and tedious when you need to repeat it 5000 times. Luckily, we don’t really have to toss a coin, as MATLAB can simulate the results for us. We know that the outcome of tossing a coin follows a binomial distribution. Using this distribution, we can simulate the outcome of each experiment (i.e., the number of heads out of a certain number of tosses).

First, we need to “guess”, usually based on pilot studies or prior similar studies, the key parameters of the binomial distributions under the null and alternative hypotheses. In the current example, the chance of the coin landing on heads under the null hypothesis is undoubtedly 50%. Under the alternative hypothesis, we will assume that the coin has a 100% chance of landing on heads, based on our observations so far. Only with these parameters specified can data be sampled from the distributions. The difference (50% vs. 100%) between the two hypotheses can be construed as the effect size, which has a great influence on power: statistical power tends to be greater with a larger effect size.

Second, we need to decide the criterion for rejecting the null hypothesis.

Once you are done with this example, you may want to continue with the example on estimating and reporting the effect size following a cluster-based test.
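The simulation described here can be sketched in a few lines. The text uses MATLAB; the sketch below is in Python and is only an illustration, not the authors' code. The function names are hypothetical, and the rejection criterion (a one-sided exact binomial test at alpha = 0.05) is an assumption, since the text does not state which criterion is used.

```python
import random
from math import comb


def p_value_at_least(k, n, p_null=0.5):
    """One-sided exact binomial p-value: P(X >= k) for X ~ Binomial(n, p_null)."""
    return sum(
        comb(n, i) * p_null**i * (1 - p_null) ** (n - i) for i in range(k, n + 1)
    )


def simulate_power(n_tosses, p_alt, n_experiments=5000, alpha=0.05,
                   p_null=0.5, seed=0):
    """Estimate power as the proportion of simulated experiments that reject H0.

    Each experiment tosses a coin n_tosses times, with the probability of
    heads set to p_alt (the alternative hypothesis). The rejection rule,
    p < alpha, is an assumed criterion, not one taken from the text.
    """
    rng = random.Random(seed)
    rejections = 0
    for _ in range(n_experiments):
        # Number of heads in one simulated experiment
        heads = sum(rng.random() < p_alt for _ in range(n_tosses))
        if p_value_at_least(heads, n_tosses, p_null) < alpha:
            rejections += 1
    return rejections / n_experiments


# Alternative hypothesis from the text: the coin always lands on heads
power_2 = simulate_power(n_tosses=2, p_alt=1.0)
power_5 = simulate_power(n_tosses=5, p_alt=1.0)
print(f"Estimated power with 2 tosses: {power_2}")
print(f"Estimated power with 5 tosses: {power_5}")
```

Under these assumptions, two tosses can never reject the null hypothesis, because even two heads out of two has a p-value of 0.25. Five tosses always reject it, because five heads out of five has a p-value of about 0.03. With a less extreme alternative (for example `p_alt=0.8`), the estimated power falls between these extremes and varies with the number of tosses.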

Power analysis through simulations – an intuitive example of coin-tossing

Let’s start with a brief introduction to estimating sample size, using an intuitive example of coin-tossing. For a more detailed and excellent introduction, please see Dr.

Suppose we are presented with a coin and we want to know whether it is fair (i.e., has a 50% chance of landing on heads). We can test this by running coin-tossing experiments. Consider the following two scenarios. In Experiment 1, we tossed the coin 2 times and observed 2 heads. In Experiment 2, we tossed it 5 times and observed 5 heads. With either experiment, we are inclined to think the coin is unfair. But intuitively, we will be more confident of the coin’s unfairness following Experiment 2 than following Experiment 1. Still, we don’t know how sure we are about our decision to call the coin unfair. We can clarify this using inferential statistics.
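As a small worked illustration of why Experiment 2 feels more convincing, the probability of each observed outcome under a fair coin can be computed directly from the binomial distribution. This is a minimal Python sketch; the helper name `binomial_pmf` is hypothetical and not part of the functions discussed in this post.

```python
from math import comb


def binomial_pmf(k, n, p):
    """Probability of exactly k heads in n tosses when P(heads) = p."""
    return comb(n, k) * p**k * (1 - p) ** (n - k)


# Probability of the observed outcomes if the coin were fair (p = 0.5)
p_exp1 = binomial_pmf(2, 2, 0.5)  # Experiment 1: 2 heads out of 2 tosses
p_exp2 = binomial_pmf(5, 5, 0.5)  # Experiment 2: 5 heads out of 5 tosses
print(f"Experiment 1: {p_exp1}")  # 0.25
print(f"Experiment 2: {p_exp2}")  # 0.03125
```

A fair coin produces two heads in a row a quarter of the time, but five heads in a row only about 3% of the time, which matches the intuition that Experiment 2 is stronger evidence of unfairness.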


The MATLAB functions are stored in the functions folder. Two demo files demonstrating how to use the functions are in the demo folder; sampleSize_timefreq.m is the one for time-frequency data. In this example, we will first briefly introduce how to estimate sample size through simulations, using an intuitive example of coin-tossing. Then, we will demonstrate how to estimate sample size through simulations for t-tests and cluster-based permutation tests.


For EEG and MEG we often use a cluster-based permutation test, which is a non-parametric test that exploits the multivariate structure in the data. Here, we demonstrate two easy-to-use MATLAB functions that use simulations to estimate the sample size for cluster-based permutation tests. These functions can be used for EEG/MEG research involving a t-test between two conditions, a one-way ANOVA with three or more conditions, or 2×N interactions. The experiment can have a within-subjects, between-subjects, or mixed design. The functions were written for, and first used in, Wang and Zhang (2021). Please cite this paper where appropriate. From this OSF project, you can download the functions and the corresponding demo files.


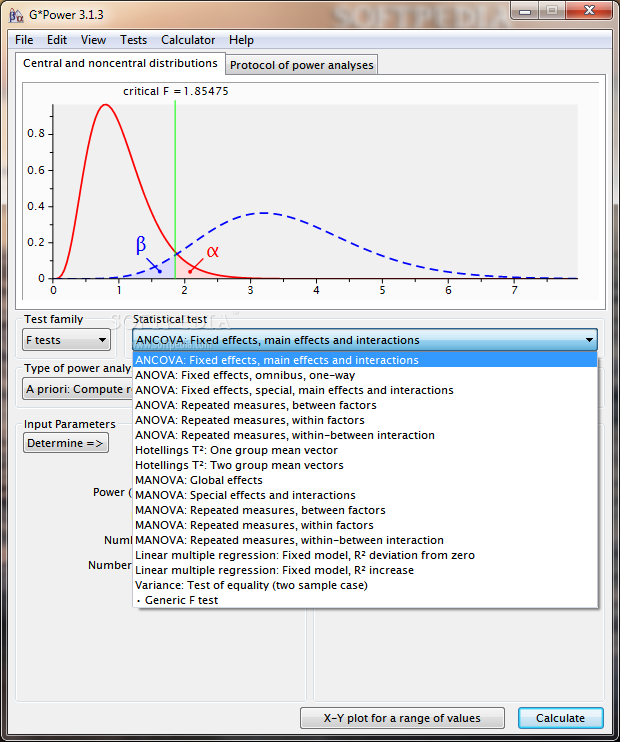

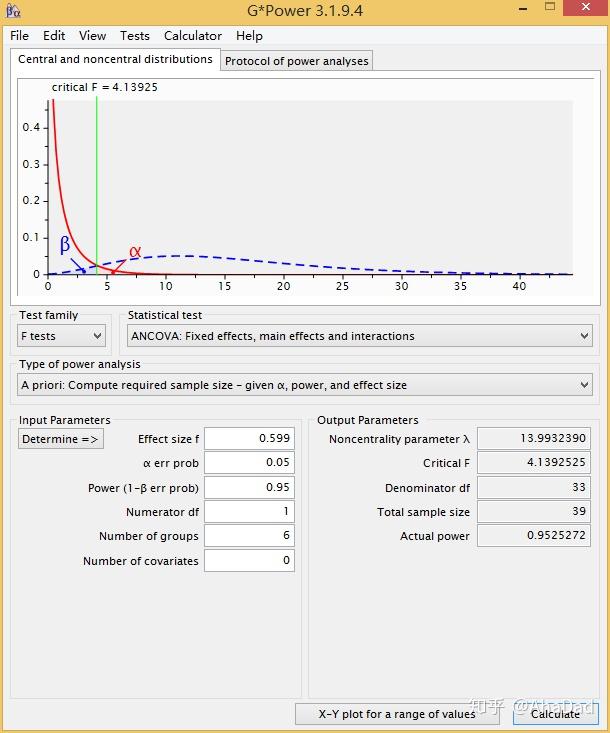

It is recommended and sometimes even required to provide justification for sample size prior to starting a study and when reporting about it (Clayson et al., 2019). Many researchers use G*Power to estimate the sample size required for their studies. However, although very useful and popular, this software is not suitable for multivariate data or for non-parametric tests.
